Operating System

What is an Operating System?

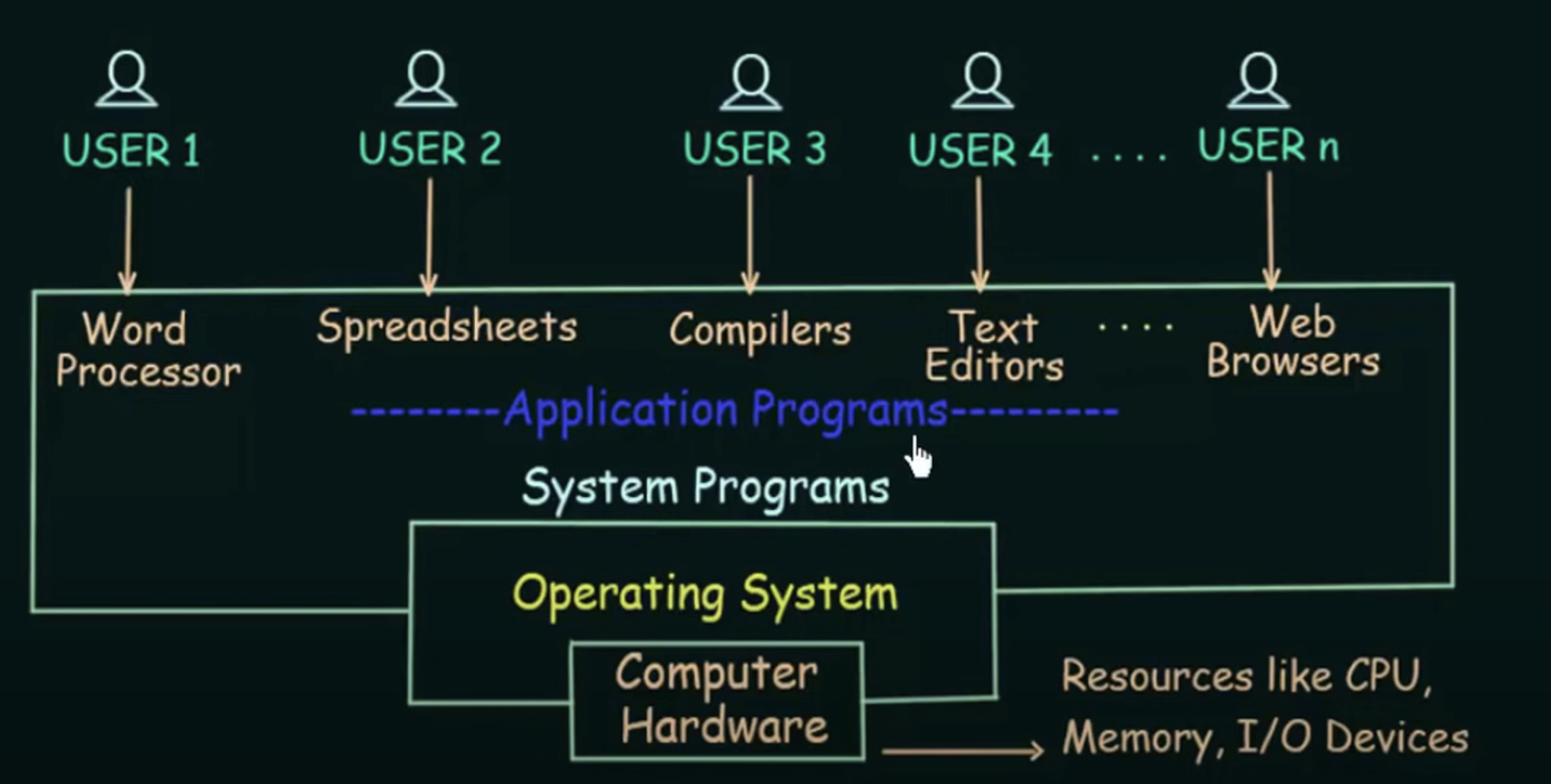

It serves as an intermediary between the computer user and the computer hardware

Specifically,

$$

\text{User} \rightarrow (\text{use}) \text{Application Programs} \rightarrow (\text{needs}) \text{Operating System} \rightarrow {\text{(to connect to)}} \text{Hardware}

$$

Without an operating system, even the simplest task, such as typing something in a word processor like Microsoft Word, would require us to write code telling the hardware how to handle our input, where to save it in memory, and so on. Really tedious, isn’t it?

So the functions of operating systems are:

- Be an interface between User & Hardware

- Allocation of Resources

- Management of Memory, Security, etc.

Basics of Operating Systems

Modern Computer System

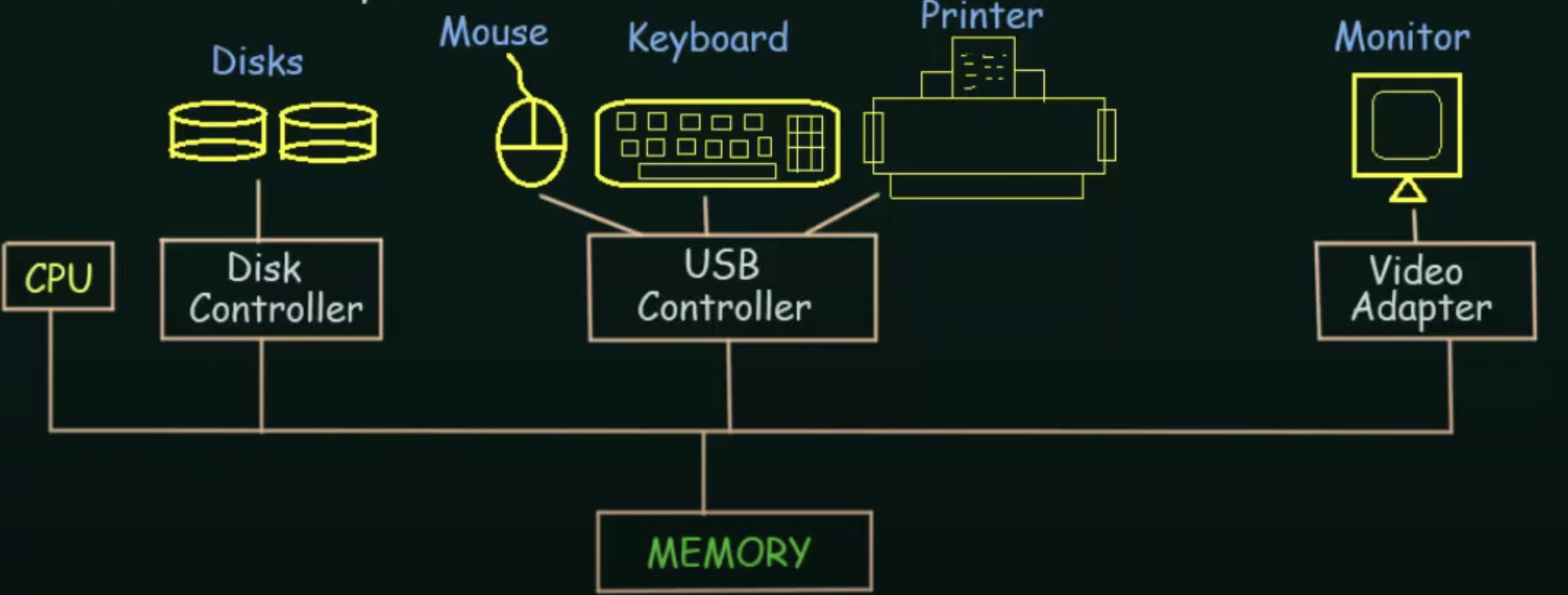

A modern general-purpose computer system consists of one or more CPUs and a number of device controllers connected through a common bus that provides access to shared memory

The figure above illustrates this sentence:

The line in the figure represents the common bus

Then,

Each device controller is in charge of a specific type of device. Each device controller maintains:

Local Buffer Storage

Set of Special Purpose Registers

The OS has a device driver for each device controller. The device driver understands its device controller and presents a uniform interface to the device for the rest of the operating system

The CPU and the device controllers can execute concurrently, competing for memory cycles.

How should we understand this sentence?

First of all, we need to be clear that whenever something is to be executed, it must first be loaded into main memory, i.e., RAM. The CPU and the device controllers therefore all need access to the same memory. Executing concurrently is what makes the system responsive: the CPU can keep working while the devices transfer data, so your input is not met with lag.

And to achieve this goal, it needs:

To ensure orderly access to the shared memory, a memory controller is provided whose function is to synchronize access to the memory

Terms You Need to Know

Throughput:

- Throughput is a measure of how many units of information a system can process in a given amount of time, i.e., a measure of the performance of the system

- The higher the throughput, the better the performance

Bootstrap Program:

The initial program that runs when the computer is powered up or rebooted

It is stored in ROM (Read Only Memory)

It must know how to load the OS and start executing that system

Explanation: The OS is also a program, and it is initially stored in secondary memory. Since the bootstrap program is the first program executed when the computer starts, it must know where the OS is and how to invoke it

It must locate and load the OS Kernel into memory

Explanation: What is the kernel? It is the core part of the operating system: the program that runs at all times and sits directly above the hardware, beneath all other software

Interrupt:

The occurrence of an event is usually signalled by an Interrupt from hardware or software

Explanation: The CPU is always working on some task. But sometimes a piece of hardware or software tells the CPU, “Please do this task first, because it is more important.” This is called an Interrupt

Hardware may trigger an interrupt at any time by sending a signal to the CPU, usually by way of the system bus

System Call (Monitor Call):

- Software may trigger an interrupt by executing a special operation called System Call

So when hardware triggers it, we usually call it an Interrupt, and when software triggers it, we call it a System Call

Then how will the CPU react to interrupts?

When the CPU is interrupted, it stops what it is doing and immediately transfers execution to a fixed location

Fixed Location: The fixed location usually contains the starting address where the Service Routine of the interrupt is located

- Service Routine: What the interrupt wants to do is written in the Service Routine. Sometimes Interrupt Service Routine is known as I.S.R.

So the fixed location indicates the address where CPU should start executing what the interrupt wants to do

Then, the I.S.R. executes

On completion, the CPU resumes the interrupted computation, i.e., goes back to where it comes from and resumes the original work
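The sequence described here (stop, jump through a fixed location to the I.S.R., run it, resume) can be sketched as a toy model in Python. This is only an illustration, not how real hardware works: the names `interrupt_vector`, `keyboard_isr`, `timer_isr`, and `handle_interrupt` are hypothetical, and the CPU state is reduced to a single program counter.

```python
# Toy model of interrupt handling. All names here are illustrative assumptions.

log = []

def keyboard_isr():
    # Interrupt Service Routine: what the keyboard interrupt wants done.
    log.append("ISR: read key from keyboard controller buffer")

def timer_isr():
    # Interrupt Service Routine for a (hypothetical) timer device.
    log.append("ISR: update system clock")

# The "fixed location": a table mapping each interrupt number to the
# starting address (here, the function) of its service routine.
interrupt_vector = {0: timer_isr, 1: keyboard_isr}

def handle_interrupt(cpu_state, irq):
    saved_pc = cpu_state["pc"]      # 1. stop and remember where we were
    isr = interrupt_vector[irq]     # 2. look up the fixed location
    isr()                           # 3. execute the I.S.R.
    cpu_state["pc"] = saved_pc      # 4. resume the interrupted computation
    return cpu_state

cpu = {"pc": 100}
handle_interrupt(cpu, 1)
```

After the call, `cpu["pc"]` is still 100, which mirrors the CPU going back to where it came from.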

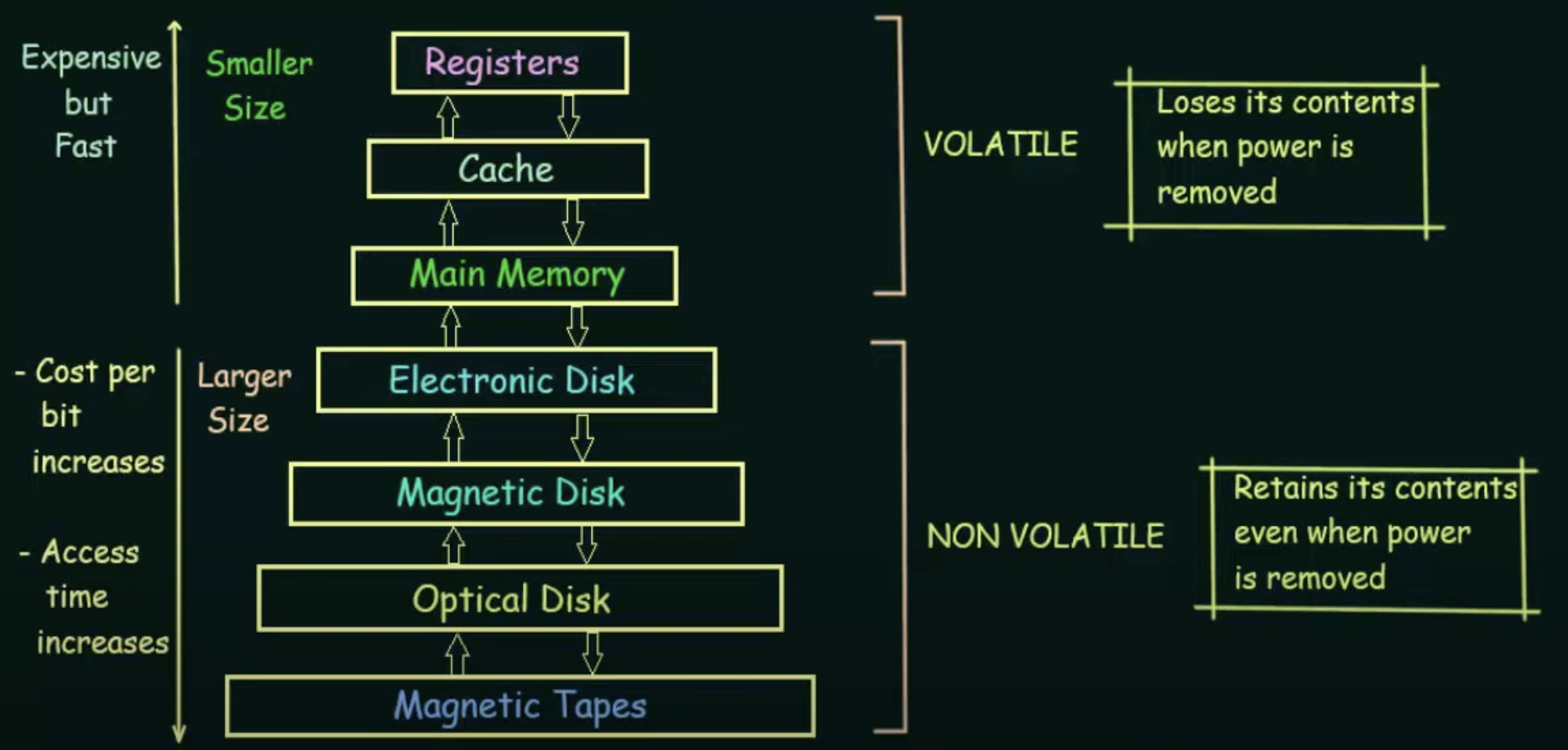

Storage Structure

- Register: The smallest and fastest storage, located inside the CPU itself. Because registers are so small and so close to the CPU, they can be accessed very quickly

- Cache: A little bigger than the register, but slower

- Main Memory: RAM (Random Access Memory)

- Secondary Storage Devices: It refers to Electronic Disks, Magnetic Disks, Optical Disks, and Magnetic Tapes

Main Memory (RAM)

Everything needed for execution will be loaded into main memory

So things are stored in secondary memory, but loaded into main memory for execution

Although the main memory is fast, its size is limited (which means you cannot store all your programs & data in main memory) and it is also volatile in nature. That’s why we need secondary memory to keep whatever is not currently in use

Volatile: Loses its contents when power is removed

Non-Volatile: Retains its contents even when power is removed

So if you have a bigger main memory, your computer can work faster, because it spends less time moving data between secondary memory and main memory

I/O

Working of an I/O Structure

To start an I/O operation, the device driver loads the appropriate registers (those required for performing a particular I/O operation) within the device controller

These registers hold the information about exactly which I/O operation has to be performed

The device controller, in turn, examines the contents of these registers to determine what action to take

The controller starts the transfer of data from the device to its local buffer

Once the transfer of data is complete, the device controller informs the device driver via an interrupt that it has finished its operation

The device driver returns control to the operating system

The graph of this process is as follows (without DMA):

As you can see, we always need to pass through CPU to store our data into memory during I/O process

This form of interrupt-driven I/O is fine for moving small amounts of data, but it produces high overhead when used for bulk data movement: the CPU is interrupted constantly, so the transfer consumes too much CPU time.

To solve this problem, Direct Memory Access (DMA) is used.

DMA: After setting up buffers, pointers, and counters for the I/O device, the device controller transfers an entire block of data directly to or from its own buffer storage to memory, with no intervention by the CPU

With DMA, data is transferred in whole blocks instead of passing through the CPU piece by piece

Only one interrupt is generated per block, to tell the device driver that the operation has completed

While the device controller is performing these operations, the CPU is available to accomplish other work
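To make the overhead difference concrete, here is a small Python sketch that counts interrupts under a deliberately simplified model. The assumption that interrupt-driven I/O interrupts the CPU once per byte is mine, chosen for illustration; the function name `interrupts_per_transfer` and the 512-byte block size are also hypothetical.

```python
import math

def interrupts_per_transfer(total_bytes, block_size=None):
    """Count completion interrupts under a simplified model.

    Assumption: without DMA the CPU is interrupted once per byte moved;
    with DMA it is interrupted once per block of `block_size` bytes.
    """
    if block_size is None:
        # Interrupt-driven I/O without DMA.
        return total_bytes
    # DMA: only one interrupt is generated per block.
    return math.ceil(total_bytes / block_size)

without_dma = interrupts_per_transfer(4096)
with_dma = interrupts_per_transfer(4096, block_size=512)
```

Under these assumptions, moving 4096 bytes costs 4096 interrupts without DMA and 8 with it.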

Computer System Architecture

Computer systems can be categorized based on number of General Purpose Processors:

- Single Processor System

- Multiprocessor System

- Clustered System: Two or more systems are clustered to perform certain tasks

Single Processor System

One main CPU capable of executing a general purpose instruction set including instructions from user processes

In fact, besides the main CPU, a single processor system also contains other special-purpose processors; they do not run general-purpose tasks but perform device-specific tasks

Multiprocessor System

- Also known as parallel systems or tightly coupled systems

- Has two or more processors in close communication, sharing the computer bus and sometimes the clock, memory, and peripheral devices

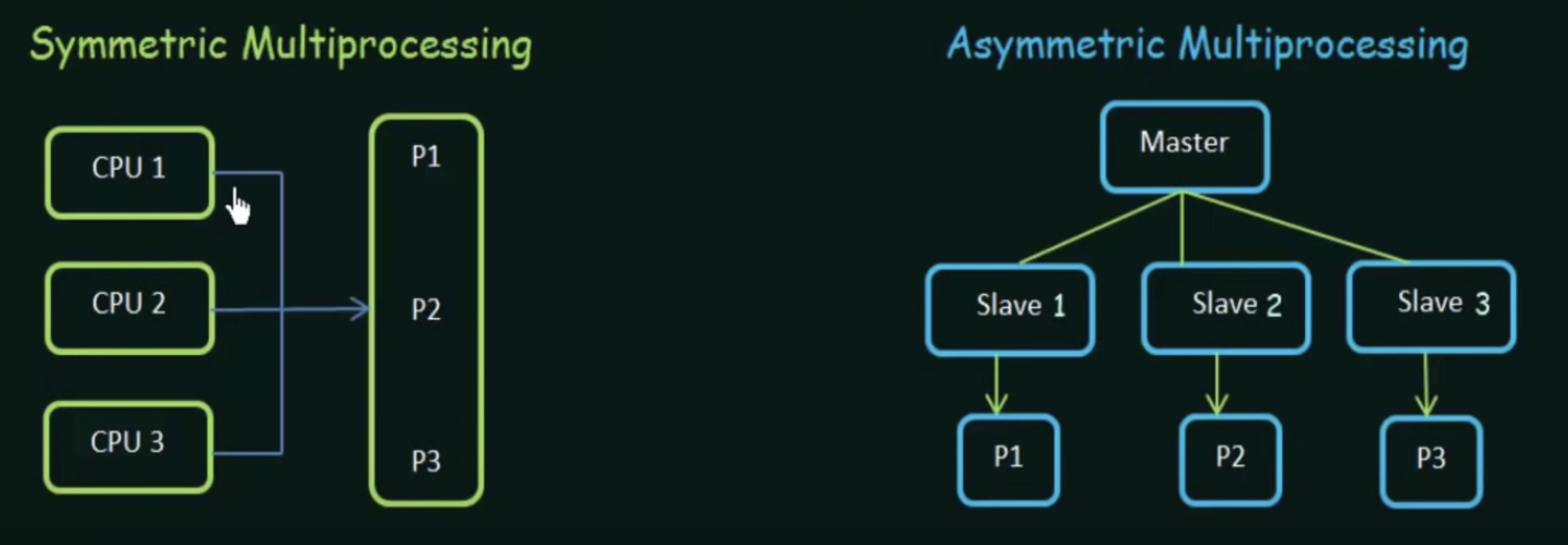

Types of Multiprocessor System

- Symmetric Multiprocessing: All CPUs are similar and work together on the same set of processes

- Asymmetric Multiprocessing: One CPU acts as the master, the others as slaves. The master assigns tasks to the slaves, which then carry out their work on their own

Clustered System

- Like multiprocessor systems, clustered systems gather together multiple CPUs to accomplish computational work

- They are composed of two or more individual systems coupled together

- Provides high availability

- Can be structured asymmetrically or symmetrically

Operating System Structure

Operating systems vary greatly in their makeup internally, but they need to have some commonalities:

- Multiprogramming

- Multitasking (Time Sharing)

Multiprogramming

Multiprogramming means the capability of the CPU to run multiple programs

A single program cannot, in general, keep either the CPU or the I/O devices busy at all times

With multiprogramming, the system can keep them busy; without it, they sit idle much of the time

Multiprogramming increases CPU utilization by organizing jobs (code and data) so that the CPU always has one to execute

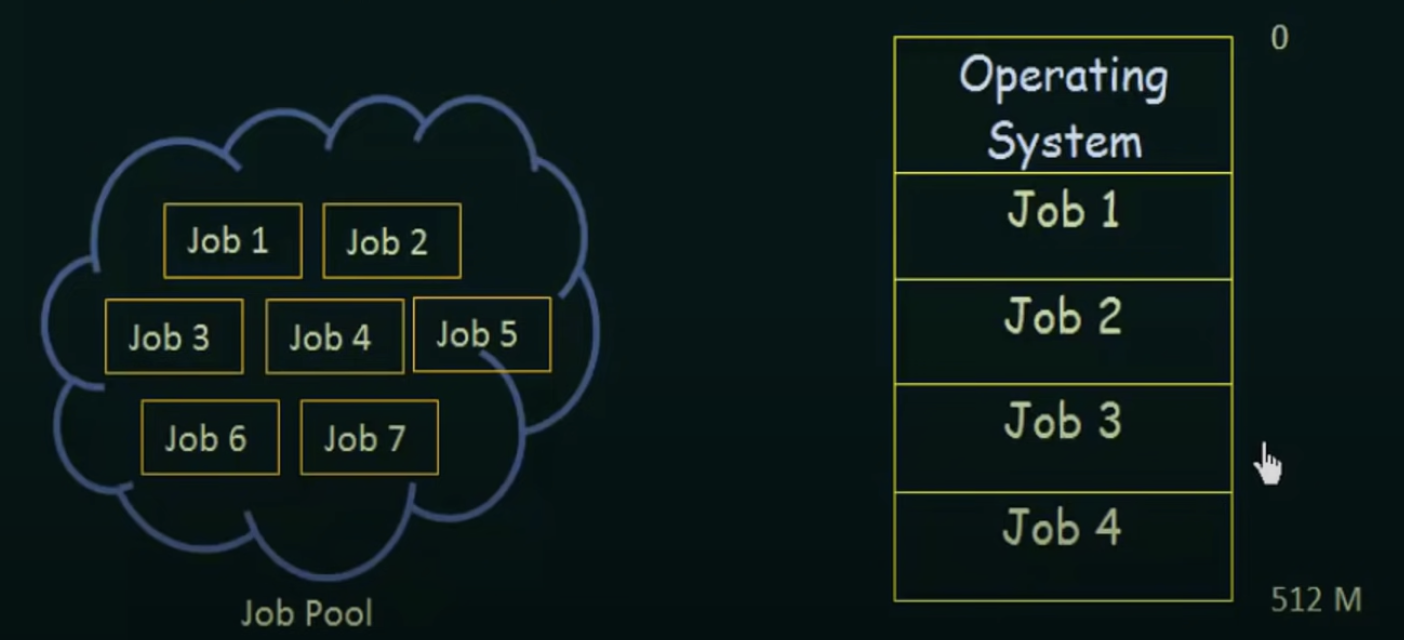

Now let us walk through the figure:

Suppose we have some jobs to be executed, and they are all in a Job Pool. Since the memory (RAM) is limited, we can load only some of the jobs in the Job Pool into it

Without multiprogramming, Job 2 cannot use the CPU until Job 1 is finished, even though Job 1 may be using only I/O devices and not the CPU itself

With multiprogramming, Job 2 is able to use the CPU while Job 1 is only using I/O devices

In conclusion, multiprogramming ensures that whenever a particular job does not need the CPU, the CPU can be used by another job
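The Job 1 / Job 2 scenario can be simulated with a short Python sketch. It rests on assumptions that are not in the notes: time advances in whole ticks, every job has its own I/O device, and the CPU goes to the first job that needs it. The function name `simulate` and the burst lengths are hypothetical.

```python
def simulate(jobs, multiprogramming):
    """Return (total_time, cpu_busy_time) for a list of jobs.

    Each job is a list of (kind, length) bursts, where kind is "cpu" or "io"
    and length is a positive whole number of ticks.
    """
    cpu_busy = sum(n for job in jobs for kind, n in job if kind == "cpu")
    if not multiprogramming:
        # Jobs run strictly one after another; the CPU idles during I/O.
        total = sum(n for job in jobs for _, n in job)
        return total, cpu_busy

    # Remaining bursts per job, as mutable [kind, remaining] pairs.
    pending = [[list(burst) for burst in job] for job in jobs]
    time = 0
    while any(pending):
        # Give the CPU to the first job whose current burst needs it.
        on_cpu = next((j for j in pending if j and j[0][0] == "cpu"), None)
        for job in pending:
            if not job:
                continue
            # I/O always progresses; a CPU burst progresses only on the CPU.
            if job[0][0] == "io" or job is on_cpu:
                job[0][1] -= 1
                if job[0][1] == 0:
                    job.pop(0)
        time += 1
    return time, cpu_busy

job1 = [("io", 4), ("cpu", 1)]   # mostly waits on an I/O device
job2 = [("cpu", 4)]              # needs only the CPU
sequential = simulate([job1, job2], multiprogramming=False)
overlapped = simulate([job1, job2], multiprogramming=True)
```

In this model the sequential run takes 9 ticks for 5 ticks of CPU work, while the multiprogrammed run finishes in 5 ticks because Job 2 uses the CPU during Job 1's I/O.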

Multitasking (Time Sharing)

CPU executes multiple jobs by switching among them

Switches occur so frequently that the users can interact with each program while it is running

Time sharing requires an interactive (or hands-on) computer system which provides direct communication between the user and the system

A time-shared operating system allows many users to share the computer simultaneously

This works because the system switches so fast that users don’t realize they are sharing the system

Suppose we have multiple users sharing the same system right now

A time-shared system uses CPU scheduling and multiprogramming to provide each user with a small portion of the time-shared computer

Each user has at least one separate program to be executed

A program loaded into memory and executing is called a PROCESS
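One common way to switch among processes is round-robin scheduling with a fixed time quantum. The notes do not name a specific scheduling algorithm, so round-robin is an assumption here, as are the function name `round_robin`, the process names, and the burst times. A minimal sketch:

```python
from collections import deque

def round_robin(burst_times, quantum):
    """Return the order in which processes receive CPU time slices.

    burst_times maps a process name to the CPU time it still needs.
    """
    ready = deque(burst_times.items())
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)       # run for at most one quantum
        timeline.append((name, run))
        if remaining > run:
            # Not finished: go to the back of the queue and wait again.
            ready.append((name, remaining - run))
    return timeline

timeline = round_robin({"A": 3, "B": 5, "C": 2}, quantum=2)
```

With a small enough quantum, every process gets the CPU again quickly, which is what lets each user feel that the system is responding to them alone.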

Operating System Services

An OS provides an environment for the execution of programs

It provides certain services to programs and to users of those programs

The services provided by the OS are listed below

User Interface

- Command Line Interface (CLI)

- Graphical User Interface (GUI)

Program Execution

OS should be able to load the program into memory, and then run the program

Process of program execution:

$$

\text{Source Code $\rightarrow$ Compiler $\rightarrow$ Object Code $\rightarrow$ Executor $\rightarrow$ Output}

$$

I/O Operations

Although you feel like you’re using input devices such as the mouse & keyboard directly, it is in fact the OS that controls the use of the I/O devices

File System Manipulation

For example, sometimes you need to create or delete files. These operations are also controlled by the operating system

It also controls the permissions that are given to certain programs or users for access to certain files

Communications

Communication between processes

Communication (e.g. information exchange) between processes (in same computer or between different computers) is controlled by the OS

Error Detection

If an error occurs, the system must not break down completely, and it should not take away your ability to compute. The OS must have a way of managing errors so that computing stays consistent and carries on even when some errors are encountered

Resource Allocation

The OS must allocate the resources in an efficient way such that all the processes get the resources that they need and no process keeps waiting for it and never gets it. And there also should not be a scenario where a resource is held by a particular process but never released

Accounting

The OS keeps track of which users use how much and what kinds of resources. This record keeping may be used for accounting or simply for accumulating usage statistics

Protection and Security

Protection:

When several different processes are executing at the same time, it should not be possible for one process to interfere with the others or with the OS itself

Protection means access to the system resources must be controlled

Security

- The system should not be accessible to outsiders who are not allowed to access the system

User Operating System Interface

Two fundamental approaches for users to interact with the operating system:

- CLI, also known as the Command Interpreter

- GUI

Command Interpreter

Some operating systems include the command interpreter in the kernel

Others, such as Windows XP and UNIX, treat the command interpreter as a program

On systems with multiple command interpreters to choose from, the interpreters are known as shells. For example,

- Bourne shell

- C shell

- Bourne-Again Shell (BASH)

- Korn Shell

System Calls

System calls provide an interface to the services made available by an Operating System

First we need to know that a program can be executed in two kinds of mode

- User mode

- Kernel mode

In User mode, the program does not have direct access to the memory and other hardware resources. In Kernel mode it does, which is why Kernel mode is also called Privileged mode

Note, however, that if a program crashes while it is in Kernel mode, the entire system crashes with it. In User mode, a crash affects only that program, which means User mode is the safer mode

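Now we come to the use of system calls. When a program in User mode needs a resource, it makes a system call to the OS. The CPU then switches from User mode to Kernel mode so that the OS can carry out the request, and switches back afterwards. This transition is often loosely called Context Switching, although strictly it is a mode switch: a context switch means switching the CPU from one process to another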
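From a program's point of view, a system call looks like an ordinary function call. As a small illustration, Python's standard `os` module exposes thin wrappers around several file-related system calls. The helper name `write_and_read_back` is hypothetical; the `os` and `tempfile` functions it uses are part of the standard library.

```python
import os
import tempfile

def write_and_read_back(data: bytes) -> bytes:
    """Write bytes to a temporary file and read them back.

    Each os.* call below asks the operating system to do the work
    on behalf of this user-mode program.
    """
    fd, path = tempfile.mkstemp()       # the OS hands back a file descriptor
    try:
        os.write(fd, data)              # request: write to the file
        os.lseek(fd, 0, os.SEEK_SET)    # request: move back to the start
        return os.read(fd, len(data))   # request: read from the file
    finally:
        os.close(fd)                    # request: release the descriptor
        os.unlink(path)                 # request: delete the file

result = write_and_read_back(b"hello")
```

The program never touches the disk itself; it only asks the OS, which performs the operation in Kernel mode and returns the result.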

Types of System Calls

- Process Control

- File Manipulation

- Device Management

- One point to note within device management is the operation of logically attaching or detaching a device

- Physically attaching means we plug a device into the system manually

- Logically attaching means the OS needs to recognize that the device has been attached and is ready to use; logically detaching means logically removing the device from the OS, e.g., ejecting a pen drive

- Information Maintenance

- It means all the information that we have about the system must be maintained and updated, e.g., date & time

- Communications

- It refers to system calls that are used for communication between different processes or different devices

- So the functionalities of this type of system call may include

- create, delete communication connection

- send, receive messages

- transfer status information

- attach or detach remote devices

System Programs

Let’s first take a look at the computer system hierarchy. System programs lie between application programs & the OS

- System programs provide a convenient environment for program development and execution

- Some of them are simply user interfaces to system calls

- Others are considerably more complex

And there are several types of system programs

- File Management: For example, list, and generally manipulate files and directories

- Status Information: For example, ask the system for date, time, and number of users

- File Modification: The difference between File Management & File Modification is that the former deals with files from the outside, e.g., creating, deleting, renaming, copying, etc., while the latter deals with the inside of files, e.g., modifying their contents

- Programming Language Support: For example, compilers, assemblers, debuggers, and interpreters

- Program Loading and Execution: Once a program is assembled or compiled, it must be loaded into memory to be executed

- Communications:

- Creating virtual connections among processes, users, and computer systems

- Allowing users to send messages to one another’s screens

- To browse webpages

- To send electronic-mail messages

- To log in remotely or to transfer files from one machine to another

Structures of Operating System

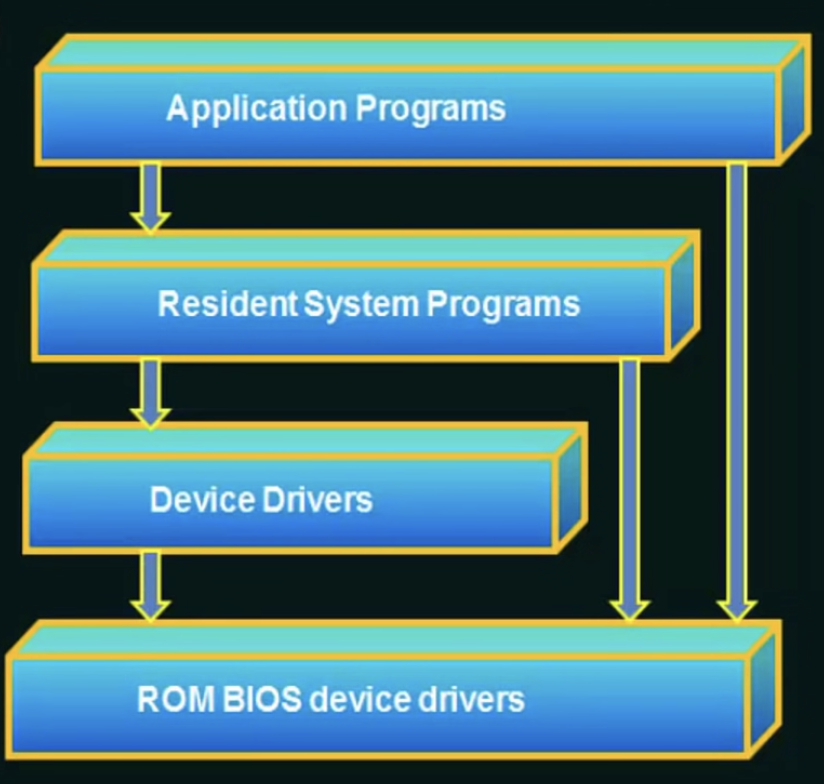

Simple Structure

MS-DOS was designed with this structure. An arrow $A \Rightarrow B$ means “A has access to B”. Let’s take a look at it from the bottom to the top

- ROM BIOS device drivers: We can think of these as the hardware level

- Device Drivers

- Resident System Programs: It refers to a set of programs within an operating system (OS) that reside in memory and are readily available. These programs are part of the operating system, typically loaded into memory when the computer starts up, and they remain active during the runtime of the operating system. They are crucial for the proper functioning and management of the system, providing core system functionalities and services

- Application Programs

As we can see, almost every layer has access to all the layers below it, which leaves the system vulnerable to errant and malicious programs: the entire system can crash when a user program fails

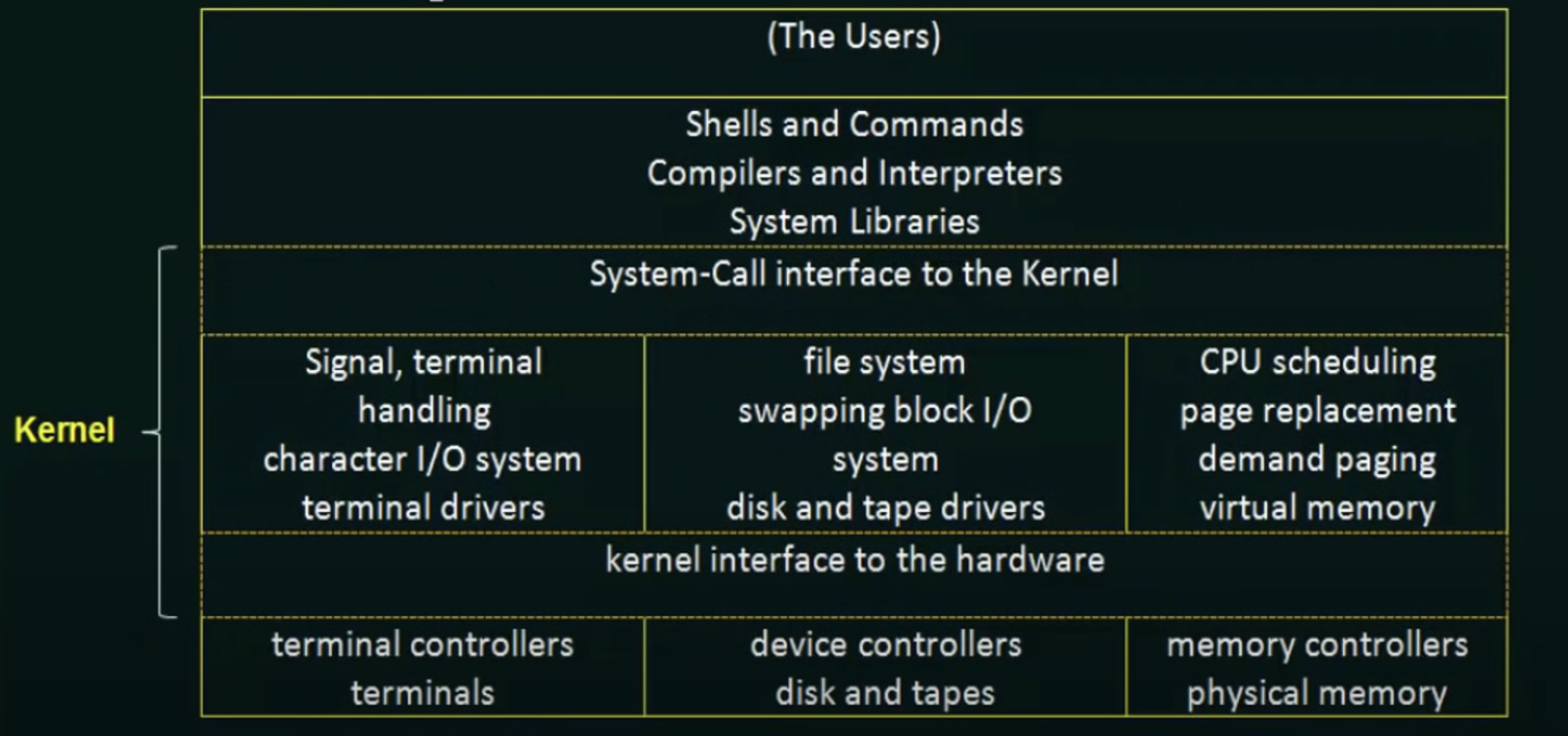

Monolithic Structure

Followed by the earlier UNIX operating systems

- Everything above Hardware and below System-Call Interface is called Kernel

We can see that nearly all the functionalities are packed into one level called the Kernel, which makes its implementation and maintenance very difficult. If you want to add something or debug, you may have to change or modify the entire thing

Layered Structure

Advantages

- Solves the two issues of the structures above: it is easy to maintain and is less vulnerable

Disadvantages

- Hard to design

- It may not be efficient enough, because when one layer wants to use a service provided by a layer below it, the request has to pass down through each layer one by one. By the time the service is actually provided, the response may be slow

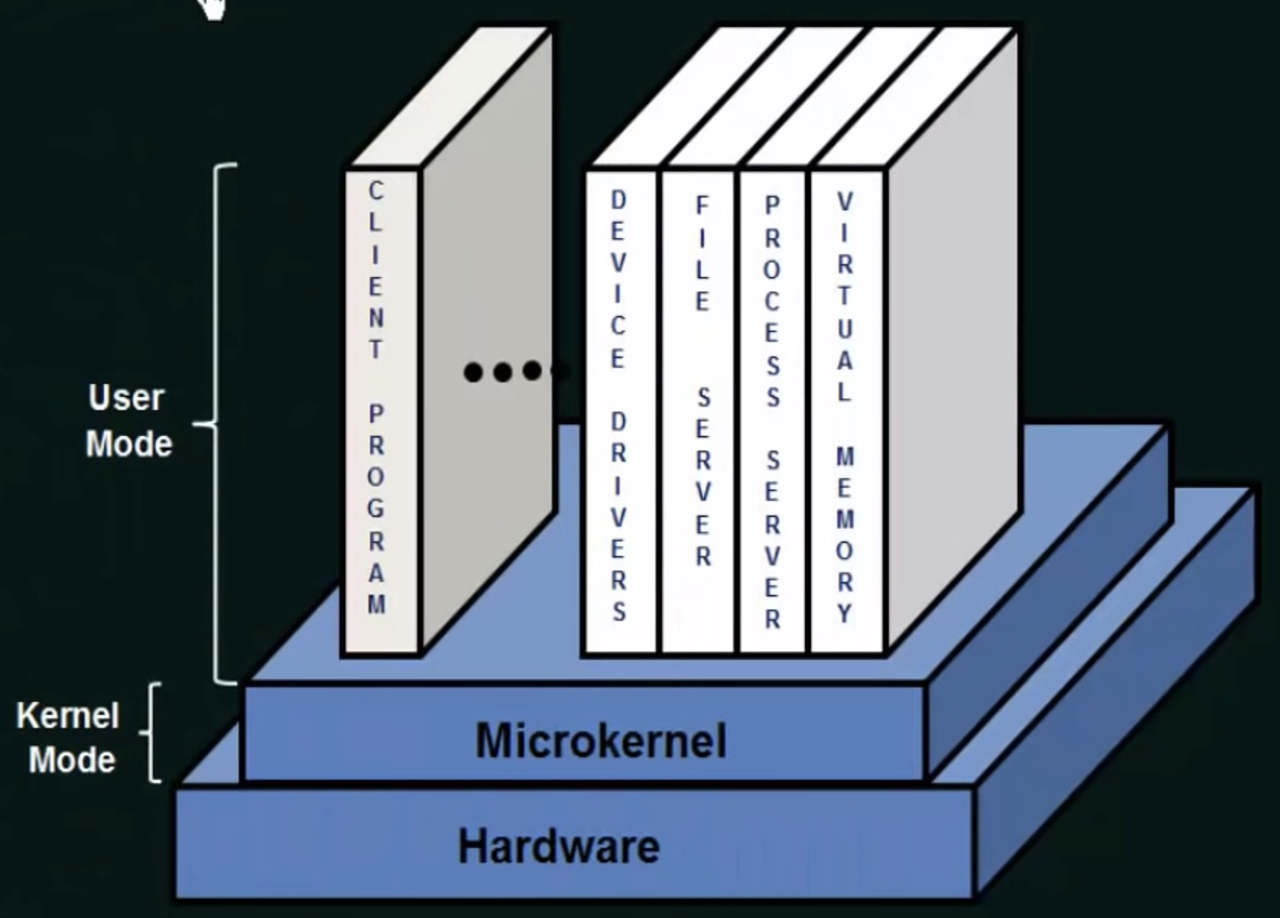

Microkernels

In this structure, the core is the Microkernel

The Microkernel approach removes all the non-essential components from the kernel and implements them as system and user level programs. So here the Microkernel provides only the core functionalities of the kernel, and other functionalities such as device drivers and virtual memory are implemented as user level system programs

So the main function of the Microkernel is to provide communication between the client program and these services, which means there is a client program requesting these services

The communication between the client program and the system programs that provide services is carried out through something known as Message Passing
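Message Passing can be illustrated with a small Python sketch in which a queue stands in for the microkernel's message channel. This is only an analogy under stated assumptions: threads stand in for separate user-level programs, and the names `requests`, `file_service`, and `client_request` are hypothetical.

```python
import queue
import threading

requests = queue.Queue()   # channel carrying messages from client to service

def file_service():
    """A user-level service: waits for messages and replies to each one."""
    while True:
        message, reply_box = requests.get()
        if message == "shutdown":
            break
        reply_box.put(f"handled: {message}")

def client_request(message):
    """The client never calls the service directly; it sends a message
    through the channel and waits for the reply."""
    reply_box = queue.Queue()
    requests.put((message, reply_box))
    return reply_box.get(timeout=5)

service = threading.Thread(target=file_service, daemon=True)
service.start()
reply = client_request("open notes.txt")
requests.put(("shutdown", None))   # ask the service to stop
service.join()
```

Every request costs a send and a receive rather than a direct function call, which is the kind of extra work that message passing introduces.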

Advantages

Most of the functions are executed in User Mode, because the kernel has only the core functionalities. So when the client program wants to access any of the services, it does not have to run in Kernel Mode; it can run in User Mode because most of the services exist as system programs.

As a result, a crash of the entire system is much less likely

Disadvantages

- A Microkernel can suffer from decreased performance due to increased system function overhead. This is caused by the use of Message Passing for communication: since messages are exchanged all the time, the accumulated overhead can reduce performance

Modules

Modules means we follow a modular approach in structuring the operating system

It makes use of object-oriented programming techniques to create a modular kernel

- Core kernel only contains the core functionalities of the kernel

- Other functionalities are present in the form of modules, which will be loaded into kernel either at boot time or at run time

- No need to use Message Passing

Virtual Machine

Operating System Generation & System Boot

Operating System Generation

Operating systems are designed to run on any of a class of machines at a variety of sites with a variety of peripheral configurations

This leads to Operating System Generation: the system must be configured or generated for each specific computer site, a process sometimes known as system generation (SYSGEN)

SYSGEN must find out:

- What CPU is to be used?

- How much memory is available?

- What devices are available?

- What operating-system options are desired?

so that it can generate the operating system for a particular machine

System Boot

The procedure of starting a computer by loading the kernel is known as booting the system. The hardware by itself, however, does not know how to locate the kernel and load it into memory, which is why a boot procedure is needed.

On most computer systems, a small piece of code known as the bootstrap program or bootstrap loader locates the kernel and loads it into memory.

This program is stored in read-only memory (ROM), because the RAM is in an unknown state at system startup. ROM is convenient because it needs no initialization and cannot be infected by a computer virus

When the full bootstrap program has been loaded, it can traverse the file system to find the operating system kernel, load it into memory, and start its execution. It is only at this point that the system is said to be RUNNING

Process Management

Processes and Threads

Process: A process can be thought of as a program in execution (to run an application, you may need to run multiple processes)

Thread: A thread is the unit of execution within a process. A process can have anywhere from just one thread to many threads

Process States

As a process executes, it changes state

The state of a process is defined in part by the current activity of that process

Process states:

- NEW: The process is being created

- READY: The process is waiting to be assigned to a processor

- RUNNING: Instructions are being executed

- WAITING: The process is waiting for some events to occur (e.g. wait for an I/O completion or reception of a signal)

- TERMINATED: The process has finished execution
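The five states can be written down as a small state machine in Python. The transition table below follows the commonly taught five-state diagram, which the list above does not spell out, so treat it as an assumption; the names `State`, `TRANSITIONS`, and `move` are hypothetical.

```python
from enum import Enum

class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"

# Allowed transitions in the usual five-state model (an assumption here).
TRANSITIONS = {
    State.NEW: {State.READY},                     # admitted by the OS
    State.READY: {State.RUNNING},                 # chosen by the scheduler
    State.RUNNING: {State.READY,                  # interrupted or preempted
                    State.WAITING,                # waits for I/O or an event
                    State.TERMINATED},            # finishes execution
    State.WAITING: {State.READY},                 # the awaited event occurred
    State.TERMINATED: set(),                      # no way back
}

def move(current, target):
    """Return the new state, or raise if the transition is not allowed."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition {current.name} -> {target.name}")
    return target

state = move(State.NEW, State.READY)
state = move(state, State.RUNNING)
state = move(state, State.WAITING)
```

Note that in this model a WAITING process cannot go straight back to RUNNING; it must become READY first and wait to be scheduled.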

Process Control Block

Each process is represented in the operating system by a Process Control Block(PCB) — also called a Task Control Block

Process ID (pid): Shows the unique number or ID of a particular process, serves as an identifier

Process State

Program Counter: Indicates the address of the next instruction that has to be executed for that particular process

Registers: Registers that are being used by a particular process

CPU Scheduling Information: It holds the priority of the process, a pointer to the scheduling queue, and other scheduling parameters

Memory Management Information: Memory that is being used by a particular process

Accounting Information: It keeps an account of certain things, like the resources that are being used by the particular process

I/O Status Information: The I/O devices that are being assigned to a particular process
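As a rough picture of how these fields fit together, a PCB can be sketched as a Python dataclass. Real kernels use far larger structures; every field name and default value below is a hypothetical choice made for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class PCB:
    pid: int                                           # process ID
    state: str = "NEW"                                 # process state
    program_counter: int = 0                           # address of the next instruction
    registers: dict = field(default_factory=dict)      # saved register contents
    priority: int = 0                                  # CPU scheduling information
    memory_limits: tuple = (0, 0)                      # memory management information
    cpu_time_used: int = 0                             # accounting information
    open_devices: list = field(default_factory=list)   # I/O status information

pcb = PCB(pid=42, priority=3)
pcb.state = "READY"
```

The OS keeps one such record per process and updates it as the process changes state.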

Process Scheduling

Process scheduling is about handling multiprogramming & time sharing. Review:

The objective of multiprogramming is to have some process running at all times, to maximize CPU utilization

The objective of time sharing is to switch the CPU among processes so frequently that users can interact with each program while it is running

To meet these objectives, the process scheduler selects an available process (possibly from a set of several available processes) for program execution on the CPU

For a single-processor system, there will never be more than one running process

If there are more processes, the rest will have to wait until the CPU is free and can be rescheduled

To help process scheduling, we have Scheduling Queues

- JOB QUEUE: As processes enter the system, they are put into a job queue, which consists of all processes in the system

- READY QUEUE: The processes that are residing in main memory and are ready and waiting to execute are kept on a list called the ready queue

Processes go from Job Queue to Ready Queue

A process executing on the CPU leaves it in one of the following ways:

- It finishes executing and changes to the terminated state

- A process with higher priority arrives, so it is swapped out and goes back to the Ready Queue

- It needs an I/O device, so it is placed in an I/O waiting queue until the device is available, and goes back to the Ready Queue afterwards

Context Switch

Interrupts cause the operating system to change a CPU from its current task and to run a kernel routine

Such operations happen frequently on general-purpose systems

When an interrupt occurs, the system needs to save the current CONTEXT of the process currently running on the CPU so that it can restore that context when its processing is done, essentially suspending the process and then resuming it

The context is represented in the PCB (Process Control Block) of the process

Therefore, switching the CPU to another process requires performing a state save of the current process and a state restore of a different process. This task is known as a CONTEXT SWITCH

Context-switch time is pure overhead (overhead means the cost), because the system does no useful work while switching

Its speed varies from machine to machine, depending on the memory speed, the number of registers that must be copied, and the existence of special instructions (such as a single instruction to load or store all registers)

And typical speeds are a few milliseconds
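The state save and state restore can be sketched in Python with plain dictionaries standing in for the CPU and the PCBs. This is a simplified model: the function name `context_switch`, the register name `r0`, and all the values are hypothetical.

```python
def context_switch(cpu, old_pcb, new_pcb):
    """Save the running process's context into its PCB, then load another's."""
    # State save: copy the CPU's registers and program counter into the old PCB.
    old_pcb["registers"] = dict(cpu["registers"])
    old_pcb["pc"] = cpu["pc"]
    old_pcb["state"] = "READY"
    # State restore: load the saved context of the next process onto the CPU.
    cpu["registers"] = dict(new_pcb["registers"])
    cpu["pc"] = new_pcb["pc"]
    new_pcb["state"] = "RUNNING"

cpu = {"pc": 120, "registers": {"r0": 7}}
p1 = {"pid": 1, "pc": 0, "registers": {}, "state": "RUNNING"}
p2 = {"pid": 2, "pc": 300, "registers": {"r0": 9}, "state": "READY"}
context_switch(cpu, p1, p2)
```

None of the copying above advances either process, which is why context-switch time counts as pure overhead.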

Operations on Processes

Process Creation

A process may create several new processes, via a create-process system call, during the course of execution

The creating process is called a parent process, and the new processes are called the children of that process

When a process creates a new process, two possibilities exist in terms of execution:

- The parent continues to execute concurrently with its children

- The parent waits until some or all of its children have terminated

There are also two possibilities in terms of the address space of the new process:

- The child process is a duplicate of the parent process (it has the same program and data as the parent)

- The child process has a new program loaded into it
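As an illustration of a parent creating a child that runs a new program, here is a minimal Python sketch using the standard `subprocess` module rather than a raw create-process system call. The helper name `spawn_child` and the child's one-line program are hypothetical.

```python
import subprocess
import sys

def spawn_child(code):
    """Create a child process running a new Python program given as source text."""
    return subprocess.Popen(
        [sys.executable, "-c", code],   # the child loads a new program
        stdout=subprocess.PIPE,
        text=True,
    )

child = spawn_child("print('hello from child')")
# At this point the parent continues to execute concurrently with its child.
output, _ = child.communicate()   # now the parent waits until the child terminates
exit_code = child.returncode
```

Here the parent first runs concurrently with the child and then waits for it, so both execution possibilities listed above appear in sequence.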